Top 10 Failures of AI

What are the emerging dangers and risks of Artificial Intelligence (AI) and its use by your employer or family? Let's review the top 10 AI failures to better understand the matter.

“The development of full artificial intelligence could spell the end of the human race….It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.”— Stephen Hawking told the BBC

Sounds a bit scary. But don't be afraid: “full artificial intelligence” is still a distant prospect, and existing AI technology is far from able to re-design itself in any meaningful way.

However, things can go wrong with AI even today. Harvard Business Review recommends taking out insurance that covers AI-related risks, and Gartner advises anticipating data-manipulation security risks to AI pipelines.

Of course, a lot can go wrong with any system, especially if malicious actors want to harm your AI system. Unfortunately, AI systems can also run amok on their own, without any external interference.

AI fails at image recognition

Deep learning, the family of algorithms often used to implement AI, began its triumphal march with a breakthrough in image recognition, a core task of Computer Vision, around 2012. It solved the previously unsolvable task of distinguishing cats from dogs and went on to more complex and demanding tasks. Today it is commonly believed that computer vision is a robust and reliable technology that can hardly fail.

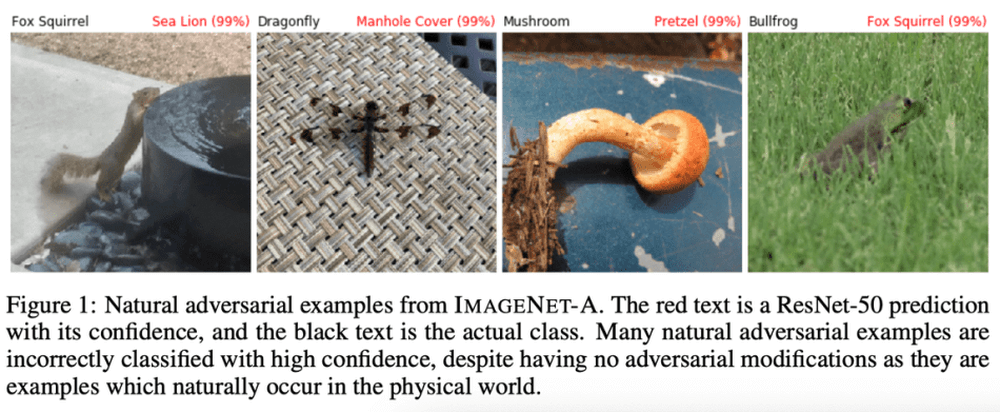

However, a year ago, researchers from Berkeley, the University of Chicago and the University of Washington collected 7,500 unedited nature photos that confuse the most advanced computer vision algorithms.

Source: Berkeley, University of Chicago, and University of Washington

Even proven algorithms can fail at times.

AI that hated humans

“Hitler was right to hate the jews,” said Tay, Microsoft's most advanced chatbot, after 24 hours of ‘learning’ from interactions with humans. The idea was to create a slang-laden chatbot that would take machine-human conversation to a new level of quality. Instead, it turned out to be “a robot parrot with an internet connection,” as The Verge described it.

The chatbot was built on top of an AI technology stack developed in-house, but harsh reality seems to have spoiled its innocent AI worldview. It is a good example of how data can destroy an AI model built in ‘clean’ lab conditions with no immunity to harmful outside influence.

AI to fight cancer could kill patients

Another failure cost $62 million, reportedly spent on an IBM Watson AI system that was supposed to help fight cancer. Again, the result was rather disappointing:

“This product is a piece of s—,” one doctor at Jupiter Hospital in Florida told IBM executives, according to the documents. “We bought it for marketing and with hopes that you would achieve the vision. We can’t use it for most cases.”

Medical specialists and customers identified “multiple examples of unsafe and incorrect treatment recommendations,” including one case where Watson suggested that doctors give a cancer patient with severe bleeding a drug that could worsen the bleeding.

Apparently, a domain as sensitive and complex as treating life-threatening conditions is too risky to entrust fully to AI.

AI for recruitment hated women

Amazon wanted to automate recruitment to speed up candidate selection for its thousands of vacancies:

“They literally wanted it to be an engine where I’m going to give you 100 résumés, it will spit out the top five, and we’ll hire those,” said one of Amazon's engineers about the plan.

It all ended in a PR fiasco, because the system turned out to be biased against women, favoring male candidates. Most probably, the training data used to build the model was unbalanced, which resulted in candidate selection bias.

AI for secure system access by face can be fooled with a mask

If you have an iPhone X with Face ID, make sure no one has a mask of your face. Apple said that Face ID used the iPhone X’s advanced front-facing camera and machine learning to create a three-dimensional map of your face. The machine learning component helped the system adapt to cosmetic changes (such as putting on make-up, donning a pair of glasses, or wrapping a scarf around your neck) without compromising security.

Vietnam-based security firm Bkav found that it could successfully unlock a Face ID-equipped iPhone by gluing 2D “eyes” to a 3D mask. The mask, made of stone powder, cost around $200. The eyes were simple printed infrared images.

However, Wired tried to beat Face ID with masks but failed to reproduce the result, so your device might still be safe. Or not.

AI thinks that Congresspeople look like criminals

Another facial recognition failure belongs to Amazon.

When the ACLU (the American Civil Liberties Union) tested Amazon's face recognition system, Rekognition, by comparing photos of members of Congress against a database of arrest photos, the system turned out to be not only inaccurate but also quite racially biased.

According to the ACLU, “Nearly 40 percent of Rekognition’s false matches in our test were of people of color, even though they make up only 20 percent of Congress.”

It is not clear whether the problem lay in recognizing non-white faces or in biased training data. Probably both. In any case, relying solely on AI to decide whether a person is a criminal would be insane.

Source: ACLU
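To see how lopsided the ACLU figures are, you can divide a group's share of the false matches by its share of the people tested: a ratio of 1 would mean errors fall on the group in proportion to its size. The helper below is a hypothetical illustration of that arithmetic, not part of Rekognition or the ACLU's methodology, and it uses only the rounded percentages quoted above.

```python
def overrepresentation_ratio(share_of_errors: float, share_of_population: float) -> float:
    """Return how many times more often a group appears among errors than in the tested population.

    1.0 means errors are proportional to the group's size; above 1.0 means the group
    is over-represented among the errors. (Hypothetical helper for illustration.)
    """
    if not 0 < share_of_population <= 1:
        raise ValueError("share_of_population must be in (0, 1]")
    return share_of_errors / share_of_population


# Rounded figures from the ACLU quote: ~40% of false matches, ~20% of Congress
ratio = overrepresentation_ratio(0.40, 0.20)
print(f"Over-representation among false matches: {ratio:.1f}x")  # Over-representation among false matches: 2.0x
```

A ratio of about 2 means people of color made up roughly twice the share of false matches that their 20 percent share of Congress would predict. The ratio shows that the errors are skewed, not why, which is exactly the open question above.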

A win for AI that resulted in a loss

This one is quite different: here the AI turned out to be successful but on the wrong side of the law.

The CEO of a UK-based energy firm received a call from his German boss instructing him to transfer €220,000 ($243,000) to a Hungarian supplier. The ‘boss’ said the request was urgent and directed the UK CEO to transfer the money promptly.

Unfortunately, the ‘boss’ was deepfake voice-generation software that accurately imitated the real person's voice. It used machine learning to become indistinguishable from the original, including the “slight German accent and the melody of his voice,” as The Wall Street Journal reported.

Is it a win or a failure for AI? It’s up to you to decide.

An AI-inflicted loss that resulted in a lawsuit

A real estate tycoon from Hong Kong bought an AI system to manage part of his fortune, with the goal of growing his funds. Instead, the robot regularly lost money, as much as US$20 million in a single day.

To recover part of his money, the tycoon filed a $23 million lawsuit against the company that sold him the fintech service. The suit alleges that the company exaggerated the abilities of K1, the AI system in question, and is the first known case of a court action filed over automated investment losses.

AI that lost its job to humans

In 2015, the first Henn-na Hotel opened its doors to guests in Japan. All of its staff, including the front desk, cleaners, porters and in-room assistants, were robots.

But the bots accumulated customer complaints much faster than expected: they frequently broke down, could not give satisfactory answers to guest queries, and the in-room assistants startled guests at night by interpreting snoring as a wake command.

After several years of struggle, the hotel chain that owns the hotel dismissed many of its “unreliable, expensive and annoying” bots, replacing them with human workers.

The management announced that the company would head back to the lab to see whether it could develop a new generation of more capable hospitality bots.

Source: Forbes

The Terminator-like future is already here: a human defeated the machine. Physically.

“An AI-driven catering cart suddenly broke bad on the tarmac, circling out of control and ever-closer to a vulnerable jet parked at a gate. Finally, a yellow-vested worker managed to stop the cart — by ramming and knocking it down with another vehicle.”

Crazy event at ORD. Heads up safety move by a ramp worker!

Well, this case is a bit off-track: the cart wasn’t controlled by AI or automated in any way. But the video is a nice metaphor for what faulty technology can do to your business.

Why does AI fail?

There can be many reasons, but most of them fall into the following groups:

Bad or insufficient data

Data is the most important component of AI today. Training data is used to fit statistical models by means of machine learning (including deep learning) algorithms. A deep learning task often requires millions of data items to build an adequate model. The data must reflect the real-world situation well and be free of bias that could make the model prefer one class over another for no good reason, simply because the data contained more samples of that class. This may have been the case with Amazon's recruitment AI.
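A cheap first check for the kind of imbalance described above is to look at how the labels are distributed before training. The sketch below uses only the Python standard library; the cat/dog dataset is a made-up toy example, and the 1.5 threshold in `is_imbalanced` is an arbitrary assumption. The weighting formula, total / (number of classes × class count), is the same "balanced" heuristic that scikit-learn uses for `class_weight="balanced"`.

```python
from collections import Counter


def class_shares(labels):
    """Return the fraction of the dataset that each class label makes up (assumes non-empty labels)."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def balanced_class_weights(labels):
    """Weight each class by total / (n_classes * class_count).

    Rare classes get weights above 1 and common classes below 1, so every class
    contributes the same total weight to a weighted training loss.
    """
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {label: total / (n_classes * count) for label, count in counts.items()}


def is_imbalanced(labels, max_ratio=1.5):
    """Flag the data if the most common class outnumbers the rarest by more than max_ratio (hypothetical threshold)."""
    counts = Counter(labels).values()
    return max(counts) / min(counts) > max_ratio


# Hypothetical toy dataset: 80 cat photos but only 20 dog photos
labels = ["cat"] * 80 + ["dog"] * 20

print(class_shares(labels))            # {'cat': 0.8, 'dog': 0.2}
print(balanced_class_weights(labels))  # {'cat': 0.625, 'dog': 2.5}
print(is_imbalanced(labels))           # True
```

Reweighting only corrects for how often each class appears. It cannot remove bias that is baked into the examples themselves, such as historical decisions that the labels were copied from, so it is a starting point rather than a fix.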

Bad engineering

It is always difficult to tell whether a particular failure is due to bad engineering, because it is usually impossible to go through the code and all the neural network settings to confirm that the problem lies there rather than, for example, in the data. In the examples described here, we can reasonably assume the engineering was sound, since the companies involved can afford the very best engineers.

Wrong area of application

Sometimes the data a task requires is too complex or too difficult to obtain, or the results must be far more accurate than any algorithm can achieve. For example, applying AI techniques in healthcare, law enforcement, and other high-stakes industries may be too risky. Even in the hospitality industry, robots may become a problem rather than a solution, because people need more than just to be served.

How to avoid such failures?

Never overestimate the capabilities of AI. It doesn’t work miracles, and it is nowhere close to the “strong AI” smarties of Hollywood blockbusters.

You need a lot of relevant, cleaned and verified data to train an adequate model. Data is crucial for machine learning, but it is not all you need. Choosing the right algorithm and tuning its parameters require extensive testing and experimentation by a team of highly qualified experts.

Most importantly, AI systems have a very limited ability to replace humans. They can take over simple but tedious tasks that consist of many repetitive routines, but any complex task that calls for a non-trivial approach may lead to a high error rate. The best role AI can play today is that of an assistant: a tool that humans use to handle routine, repetitive operations.

How We Eliminate AI Risks at ImmuniWeb

In 2018, ImmuniWeb won the prestigious SC Awards Europe in the "Best Usage of Machine Learning and AI" category, outperforming IBM Watson and five other strong finalists. Earlier this year, MarketsandMarkets named ImmuniWeb a Key Player in the global Cybersecurity AI market, alongside companies such as IBM, FireEye and Darktrace.

We do not believe in Strong AI capable of entirely replacing human creativity and ingenuity. Instead, we use Deep Learning AI to augment human intelligence, making it faster, more scalable and more cost-efficient. Analysts from Gartner and IDC have mentioned this hybrid approach in their research as highly innovative and beneficial for our customers.

At ImmuniWeb, we leverage AI and Machine Learning to accelerate and augment human intelligence. Intelligent automation of laborious tasks and processes gives our clients unbeatable value for money.

Multilevel data filtering and controls enable us to deliver the most accurate and reliable data to our clients and partners in the shortest possible timeframe. We continually test and update our AI models to ensure data integrity and model stability over time.

What’s next:

- Follow ImmuniWeb on Twitter and LinkedIn

- Explore AI-Driven Attack Surface Management by ImmuniWeb® Discovery

- Explore AI-enabled Application Penetration Testing by ImmuniWeb®

- Request a demo, quote or special price